Technical Information on the 5 Gigapixel Image

96000×54000 pixels, that is 5,184,000,000 pixels or a bit more than 5 gigapixels. In an uncompressed image format this takes about 15 gigabytes of storage space. Producing an image of this size is of course not difficult per se; the difficulty lies in filling it with meaningful content. There are various projects producing photographs of comparable size by stitching together multiple photos. I don't know of any other project, however, that attempts to generate a detailed picture of this size using 3D rendering techniques.
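The pixel count and storage figure follow from simple arithmetic. The sketch below assumes 8 bits per channel RGB (3 bytes per pixel) with no alpha channel and no file-format overhead. The text does not state the pixel format, so this is an assumption, and the names `IMAGE_W`, `IMAGE_H` and `raw_size_bytes` are illustrative only.

```python
# Minimal sketch: pixel count and raw storage size of the full image.
# Assumption (not stated in the text): 8 bits per channel RGB, i.e. 3 bytes
# per pixel, with no alpha channel and no file-format overhead.
IMAGE_W = 96_000
IMAGE_H = 54_000
BYTES_PER_PIXEL = 3  # assumed 24-bit RGB


def raw_size_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of the uncompressed pixel data in bytes."""
    return width * height * bytes_per_pixel


pixels = IMAGE_W * IMAGE_H
size_bytes = raw_size_bytes(IMAGE_W, IMAGE_H, BYTES_PER_PIXEL)

print(f"{pixels:,} pixels = {pixels / 1e9:.2f} gigapixels")
print(f"{size_bytes / 1e9:.2f} GB ({size_bytes / 2**30:.2f} GiB) uncompressed")
```

Under this assumption the raw data comes to about 15.6 GB (roughly 14.5 GiB), which matches the 15 gigabytes mentioned in the text.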

To support the claim of this being the most detailed image generated using 3D rendering techniques, a measure of the detail level would of course be necessary. This image is rendered using a mesh-free technique, which makes a direct comparison difficult, but its geometric detail level is equivalent to more than 10 billion triangles.
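The text does not say how the triangle equivalence is determined. Purely for a sense of scale, and not necessarily the way the figure in the text was derived, the sketch below counts the triangles of a regular heightfield grid meshed with two triangles per grid cell. The function name `heightfield_triangles` is hypothetical.

```python
def heightfield_triangles(width: int, height: int) -> int:
    """Triangle count of a regular width x height sample grid
    meshed with two triangles per grid cell."""
    if width < 2 or height < 2:
        return 0  # fewer than two samples in a direction: no grid cells
    return 2 * (width - 1) * (height - 1)


# Hypothetical comparison: one elevation sample per output pixel.
tris = heightfield_triangles(96_000, 54_000)
print(f"{tris:,} triangles ({tris / 1e9:.1f} billion)")
```

With one elevation sample per output pixel this already gives about 10.4 billion triangles.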

An image of this size could be printed at about 11×6 meters with a quality and detail level comparable to a photograph. But to look detailed and accurate even at this scale, the scene content, in this case the representation of the earth surface, has to be sufficiently detailed as well. This places very special demands on the rendering system's ability to handle complex scenes: techniques that very efficiently handle the low detail geometry of 3D computer games or computer generated movie sequences do not scale well enough to be used for this purpose.
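The relation between print size and pixel density is a simple conversion. The sketch below computes the resulting pixels per inch (ppi) for the 11×6 meter print mentioned above and, as a hypothetical comparison, the print width at 300 ppi. The function names are illustrative, and the text does not define which ppi value counts as photo quality.

```python
# Sketch: pixel density of a print of the full image at a given size.
MM_PER_INCH = 25.4


def print_ppi(pixels: int, length_m: float) -> float:
    """Pixels per inch when `pixels` span `length_m` meters of print."""
    inches = length_m * 1000.0 / MM_PER_INCH
    return pixels / inches


def print_length_m(pixels: int, ppi: float) -> float:
    """Print length in meters for `pixels` printed at `ppi`."""
    return pixels / ppi * MM_PER_INCH / 1000.0


width_ppi = print_ppi(96_000, 11.0)
height_ppi = print_ppi(54_000, 6.0)
print(f"11 x 6 m print: {width_ppi:.0f} x {height_ppi:.0f} ppi")
# Hypothetical alternative: the width of a 300 ppi print
print(f"width at 300 ppi: {print_length_m(96_000, 300):.2f} m")
```

At 11×6 meters this works out to roughly 220 to 230 ppi. The two values differ slightly because 11×6 meters is a rounded size that does not exactly match the image's 16:9 aspect ratio.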

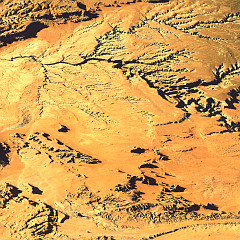

Zoom into Monument Valley illustrating the detail level required for a 5 gigapixel image.

The raytracing approach used to generate the Views of the Earth images, which is described elsewhere, is capable of handling this task. The downside is that with raytracing the rendering time is essentially directly proportional to the number of pixels, and 5 gigapixels is quite a lot.

Of course the amount of geometry and surface color data is far too large to fit into the memory of today's common computer systems as a whole. In compressed form the source data used for generating the image is about 20 to 30 GB in size; during processing this grows to about 100 GB. I therefore divided the image into tiles and rendered each of them using only the data for its region. The area covered by full resolution data has to extend sufficiently beyond the tile itself so that non-local effects like shadows and reflections do not cause discontinuities across tile boundaries. If this is taken into account, the resulting tiles can be assembled afterwards without visible seams.
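One way to size that margin for shadows is sketched here under simplifying assumptions. The terrain is handled in a projected ground coordinate system in meters, the tile's ground footprint is known as an axis-aligned box, and the margin only needs to cover the longest shadow: the terrain relief divided by the tangent of the sun elevation. Reflections and the oblique viewing direction may require a larger margin. `GroundBox`, `shadow_margin_m` and all numbers are hypothetical and not taken from the actual rendering setup.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GroundBox:
    """Axis-aligned box in projected ground coordinates (meters)."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def expanded(self, margin_m: float) -> "GroundBox":
        """Return the box grown by `margin_m` on every side."""
        return GroundBox(self.xmin - margin_m, self.ymin - margin_m,
                         self.xmax + margin_m, self.ymax + margin_m)


def shadow_margin_m(relief_m: float, sun_elevation_deg: float) -> float:
    """Horizontal reach of a shadow cast by terrain `relief_m` higher than
    its surroundings, with the sun `sun_elevation_deg` above the horizon."""
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return relief_m / math.tan(math.radians(sun_elevation_deg))


# Hypothetical values: a 200 km x 120 km tile footprint, 3000 m of relief,
# sun 30 degrees above the horizon.
footprint = GroundBox(0.0, 0.0, 200_000.0, 120_000.0)
margin = shadow_margin_m(relief_m=3000.0, sun_elevation_deg=30.0)
data_box = footprint.expanded(margin)
print(f"margin {margin:.0f} m, data box {data_box}")
```

A low sun produces long shadows and therefore a wide margin. This is one reason the data area per tile can be noticeably larger than the tile itself.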

The tile size was chosen so that the data set for each tile would fit into the computer's memory (typically about 1.5 to 2 GB). The rendering time for a tile is about 15 to 30 hours. That sounds like a lot, but remember that each tile is 9600×5400 pixels large; a screen-sized render would usually be finished in less than an hour.
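The tile layout and the overall rendering effort can be estimated from the figures given. The sketch assumes equally sized, non-overlapping tiles and, as stated for raytracing, a render time proportional to the pixel count. The function names are illustrative. The text does not say whether tiles were rendered in parallel, so the totals are sequential single-machine times.

```python
# Sketch: tile layout and total render time, assuming render time is
# proportional to the pixel count.
IMAGE_W, IMAGE_H = 96_000, 54_000
TILE_W, TILE_H = 9_600, 5_400


def tile_grid(image_w: int, image_h: int, tile_w: int, tile_h: int):
    """Return (columns, rows) of a grid of equally sized, non-overlapping tiles."""
    if image_w % tile_w or image_h % tile_h:
        raise ValueError("image size is not a multiple of the tile size")
    return image_w // tile_w, image_h // tile_h


def scaled_render_hours(ref_hours: float, ref_pixels: int, target_pixels: int) -> float:
    """Extrapolate render time linearly with the number of pixels."""
    return ref_hours * target_pixels / ref_pixels


cols, rows = tile_grid(IMAGE_W, IMAGE_H, TILE_W, TILE_H)
tile_pixels = TILE_W * TILE_H
image_pixels = IMAGE_W * IMAGE_H
for hours_per_tile in (15, 30):
    total = scaled_render_hours(hours_per_tile, tile_pixels, image_pixels)
    print(f"{cols * rows} tiles at {hours_per_tile} h: {total:.0f} h "
          f"(~{total / 24:.0f} days on a single machine)")
```

This gives a 10×10 grid of 100 tiles and 1500 to 3000 hours in total, roughly two to four months of sequential rendering on one machine.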

Overall, the technique is quite comparable to stitching photographs. In contrast to photo stitching, however, there is no need to blend the individual tiles, because there are no differences between them due to lighting changes, vignetting, moving objects or geometric inaccuracies. This of course requires the data processing parameters, the scene lighting and the render settings to be identical for all tiles.
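Because no blending is needed, assembling the tiles reduces to copying each tile's pixels to its offset in the final image. The sketch below assumes that both tiles and output are raw, row-major 24-bit RGB without a header. The actual file formats used are not described in the text, and `place_tile` is a hypothetical helper. Writing row by row through `seek` means the full image never has to be held in memory.

```python
import io
from typing import BinaryIO

BYTES_PER_PIXEL = 3  # assumed raw 24-bit RGB, row-major, no header


def place_tile(out: BinaryIO, image_w: int, tile: bytes,
               tile_w: int, tile_h: int, x0: int, y0: int) -> None:
    """Copy a raw RGB tile into a raw RGB image at pixel offset (x0, y0).

    Pixels are copied verbatim, row by row; there is no overlap and no
    blending between neighbouring tiles.
    """
    row_bytes = tile_w * BYTES_PER_PIXEL
    if len(tile) != row_bytes * tile_h:
        raise ValueError("tile data does not match tile_w x tile_h")
    for row in range(tile_h):
        # Byte offset of the first pixel of this tile row in the output image.
        out.seek(((y0 + row) * image_w + x0) * BYTES_PER_PIXEL)
        out.write(tile[row * row_bytes:(row + 1) * row_bytes])


# Tiny demo: two 2x2 tiles side by side form a 4x2 image.
image_w, image_h = 4, 2
out = io.BytesIO(bytes(image_w * image_h * BYTES_PER_PIXEL))
place_tile(out, image_w, b"\x01" * 12, tile_w=2, tile_h=2, x0=0, y0=0)
place_tile(out, image_w, b"\x02" * 12, tile_w=2, tile_h=2, x0=2, y0=0)
```

For the full image the same function could write into a file opened in binary mode (for example with `open(path, "r+b")`) instead of the in-memory buffer.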

The data sources

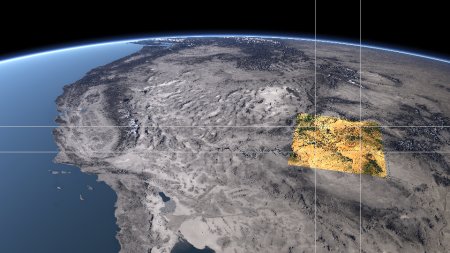

The area shown in the image was chosen with respect to the available data sources. The countries covered by the view, Mexico, the U.S.A. and Canada, all provide high resolution elevation data for a precise representation of the earth surface geometry without restrictive license terms. For the reasons why similar data is not available elsewhere, especially in Europe, see my essay on data availability. The mentioned data sources offer elevation data with a resolution of at least 1 arc second for the whole area of the image, which corresponds to a spatial resolution of about 30 meters or better.
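The conversion from arc seconds to meters depends on latitude, at least in the east-west direction. The sketch below uses a spherical Earth with a mean radius of 6371 km. The real datasets use an ellipsoidal datum, which changes these numbers only slightly. The function name `arcsec_spacing_m` and the sample latitudes are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def arcsec_spacing_m(arcsec: float, latitude_deg: float) -> tuple[float, float]:
    """Ground spacing of a grid step of `arcsec` arc seconds on a sphere.

    Returns (north_south_m, east_west_m); the east-west spacing shrinks
    with the cosine of the latitude.
    """
    north_south = math.radians(arcsec / 3600.0) * EARTH_RADIUS_M
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


for lat in (20.0, 35.0, 49.0, 60.0):
    ns, ew = arcsec_spacing_m(1.0, lat)
    print(f"latitude {lat:4.1f}: {ns:.1f} m north-south, {ew:.1f} m east-west")
```

One arc second is about 30.9 m north-south everywhere, while the east-west spacing drops to roughly 20 m near 49° N. This is why 1 arc second data corresponds to about 30 m or finer.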

For the surface coloring I use Landsat images, which are also freely available, from the Global Land Cover Facility. These images are processed to compensate for atmospheric influence and shading. Still, the resulting coloring differs quite a lot from the lower resolution Blue Marble datasets used for the low resolution renders.

The data processing

Processing of the data works essentially the same way as for the other Views of the Earth images. See the credits page for a list of programs involved in data preparation. These, together with various self-written tools and scripts, are used to assemble the data for each tile of the render.

Other large image projects

This is in no way the first image of this size, and as mentioned at the top of this page, it is not particularly difficult to formally generate such an image. There have been quite a lot of attempts to produce photographs of comparable size by combining several photos or by using special cameras. Especially with photographs, it should be noted that the nominal resolution does not necessarily reflect the ability to resolve small details. Scanning, limited lens quality, sensor noise and reprojection losses can have a significant influence on the quality of the final image.

One of the earliest demonstrations was the Gigapixel Image of Bryce Canyon by Max Lyons, who used standard panorama stitching tools to combine more than 100 individual photos.

Another, more recent project creating large photographs with special analog cameras and scanning is the Gigapxl Project. They specialize in landscape photography and have an extensive collection of images.

There are various other, mostly experimental attempts to generate large photographs of up to 10 gigapixels.

imagico.de • blog.imagico.de • services.imagico.de • maps.imagico.de • earth.imagico.de • photolog.imagico.de